Getting your website properly crawled and indexed by search engines like Google is fundamental to search visibility and a successful SEO strategy. Without proper indexation, even the most well-designed websites remain invisible to potential visitors searching for relevant information. Understanding how search engines discover, crawl, and index web pages empowers you to optimize your site for maximum search engine visibility.

The journey from publishing content to appearing in search engine results pages involves two critical processes that many website owners confuse: crawlability and the indexing process. When crawling your site, search engines assess technical accessibility before moving on to the indexation stage. While these concepts work together to determine your site’s search engine visibility, they represent distinct stages in how search engines interact with your content.

Crawlability Defined

Crawlability refers to how search engine crawlers access and navigate through the content on your site, ensuring your website can be crawled by Google and other search engines. When a site is crawlable, search engine bots can successfully reach your pages, follow internal links, and discover new sections within your domain. Several technical factors influence crawlability:

- Server response times and uptime reliability

- Robots.txt directives and accessibility rules

- Internal linking structures and navigation paths

- Technical barriers like authentication requirements
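
As a rough illustration of the robots.txt factor above, the following sketch uses Python’s standard `urllib.robotparser` module to test whether a crawler may fetch a URL. The robots.txt content, the `is_crawlable` helper, and the example.com URLs are assumptions made up for this example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, used only for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Allow: /
"""


def is_crawlable(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the robots.txt rules allow user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for path in ("/blog/post", "/admin/login"):
        url = "https://example.com" + path
        print(url, is_crawlable(ROBOTS_TXT, "Googlebot", url))
```

Python’s parser applies the first matching rule, while Google applies the most specific one, so results can differ for files with overlapping Allow and Disallow rules. Treat this as a quick local check rather than a replacement for the robots.txt report in Google Search Console.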

Indexability Defined

Indexability determines whether search engines like Google will store your crawled pages in their index for potential display in search results. Even if a page is successfully crawled, it may not be indexed due to quality concerns, duplicate content issues, or explicit directives like noindex tags.

The indexation process involves search engines evaluating your pages’ information for quality, relevance, and uniqueness before deciding to include them in their searchable database.
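
Explicit noindex directives are the easiest of these exclusion reasons to check yourself. The sketch below looks for a noindex directive in a page’s robots meta tag and in an `X-Robots-Tag` response header. The `RobotsMetaParser` class and `has_noindex` function are hypothetical names, and the header handling is simplified: it does not parse user-agent prefixes such as `googlebot: noindex`.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() in ("robots", "googlebot"):
            content = attr.get("content") or ""
            self.directives.update(
                d.strip().lower() for d in content.split(",") if d.strip()
            )


def has_noindex(html, headers=None):
    """Return True if the HTML or the response headers ask not to be indexed."""
    parser = RobotsMetaParser()
    parser.feed(html)
    directives = set(parser.directives)
    for name, value in (headers or {}).items():
        if name.lower() == "x-robots-tag":
            directives.update(d.strip().lower() for d in value.split(","))
    # "none" is shorthand for "noindex, nofollow".
    return bool(directives & {"noindex", "none"})
```

Running this over your most important URLs is a quick way to catch a noindex tag that was left in place after a staging deployment.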

Why Crawlability Does Not Guarantee Indexation

Understanding that crawlability doesn’t automatically lead to indexation is crucial for an effective SEO strategy. Google crawls significantly more pages than it indexes, with some estimates suggesting that only 60-70% of crawled pages make it into the search index. This selectivity is why high-quality, unique content matters: pages that offer little genuine value to users are often crawled but left out of the index, which limits long-term visibility and performance.

How Search Engines Crawl and Index Websites

The process of getting your content from publication to appear in the search results involves a sophisticated system of discovery, analysis, and storage, showing how indexing works across billions of web pages.

Googlebot Discovery Process

Google’s web crawler discovers new content through multiple pathways:

- Following links from already-indexed pages

- Processing submitted XML sitemaps

- Analyzing external links from other domains

- Monitoring social media mentions and references

Googlebot prioritizes URLs based on perceived importance, update frequency, and the site’s overall crawl budget allocation.

Rendering and Processing Content

Once Googlebot accesses a page, it begins rendering and understanding the information. Modern web pages often rely heavily on JavaScript for page generation, requiring sophisticated rendering capabilities. The rendering process can take anywhere from a few seconds to several minutes, depending on page complexity and page load times. Keep the scripts and stylesheets a page depends on accessible and up to date so that the rendered result matches what users see.

Storing and Ranking in the Index

After successful rendering, Google evaluates whether the page meets indexation criteria. This evaluation considers content quality, uniqueness, user value, and compliance with quality guidelines. Pages that pass this evaluation are stored in Google’s index, where they become eligible to appear in search results based on relevance and numerous ranking factors.

How to Check if a Website is Crawled and Indexed

Monitoring your website’s crawl and indexation status is essential for maintaining optimal search engine visibility. You need to make sure your website is indexed properly to avoid wasted effort. Several reliable methods allow you to verify whether search engines have successfully discovered and indexed your content.

Using Google Search Console

Google Search Console (GSC) provides the most comprehensive way to monitor your site’s performance and check whether your website is indexed. Key features include:

- Coverage Report (now labeled Page indexing in GSC): Shows which pages Google has indexed and highlights those that aren’t indexed, allowing you to take corrective action quickly.

- URL Inspection Tool: Checks individual pages with detailed crawl information

- Sitemaps Section: Compares submitted URLs versus indexed pages

site: Queries in Google

Performing site-specific searches using “site:yourdomain.com” in the Google search bar can give you a rough estimate of the number of pages on your site that are indexed. While useful for general monitoring, these queries shouldn’t be treated as accurate counts of indexed pages.

Checking Cached Versions of Pages

Google’s cached page feature once let you verify successful crawling by searching “cache:yourpageurl.com”, but Google retired cached pages in 2024. The URL Inspection tool in Google Search Console now fills that role: it shows the HTML Google retrieved, gives the last crawl date, and can reveal whether errors or accessibility issues prevent search engines from indexing certain URLs.

Verifying via Ranking Signals

Monitoring organic traffic through Google Analytics and checking rankings for target keywords provides indirect confirmation of successful indexation. Consistent organic traffic indicates that multiple pages are indexed and accessible to users.

Common Crawl and Indexation Issues

Website owners frequently encounter specific technical and content-related issues that prevent successful crawling and indexation.

Thin or Low-Value Content

Search engines increasingly prioritize content that provides substantial value to users. Common thin content issues include:

- Automatically generated pages with minimal unique information

- Product pages lacking detailed descriptions

- Excessive pagination creating multiple low-value pages

- Content that merely aggregates information available elsewhere

Research such as Backlinko’s SEO Content Study suggests that comprehensive content (typically 1,000+ words) has better indexation rates than shorter pages.

Duplicate Content

Duplicate content presents one of the most common indexation challenges. Internal duplicates can result from URL parameters, printer-friendly versions, or content management systems that create multiple access paths to the same page. When search engines detect duplicates, they typically index only one version and ignore the others, and the version they choose may not be the one you want to rank.
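
A minimal sketch of how parameter-based duplicates can be spotted in a list of crawled URLs: it normalizes each URL and groups the ones that collapse to the same address. The `IGNORED_PARAMS` list, the helper names, and the choice to strip trailing slashes are assumptions for illustration. Adjust them to how your own site actually serves pages.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed set of parameters that do not change what the page shows.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid", "print"}


def normalize(url: str) -> str:
    """Lowercase scheme and host, drop ignored parameters, sort the rest."""
    parts = urlsplit(url)
    query = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    )
    path = parts.path.rstrip("/") or "/"  # assumes /page and /page/ are the same
    return urlunsplit(
        (parts.scheme.lower(), parts.netloc.lower(), path, urlencode(query), "")
    )


def group_duplicates(urls):
    """Return {normalized_url: [original urls]} for groups with more than one URL."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize(url)].append(url)
    return {key: members for key, members in groups.items() if len(members) > 1}
```

Each group returned by `group_duplicates` is a candidate for a single canonical URL.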

Crawl Errors and Broken Links

Technical errors during crawling can prevent search engine access. Common issues include:

- Server timeouts and DNS problems

- HTTP status code errors indicating unavailable pages

- Broken internal links preventing page discovery

- Server configuration problems during website migrations
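
To triage the status code errors listed above, a crawl log can be bucketed by response class. This sketch assumes a hypothetical log in the form of a dictionary that maps each URL to the status code it returned. The category names are illustrative, not official terminology.

```python
def classify_status(code: int) -> str:
    """Map an HTTP status code to a rough crawl-health category."""
    if 200 <= code < 300:
        return "ok"
    if 300 <= code < 400:
        return "redirect"
    if code in (404, 410):
        return "missing"
    if 400 <= code < 500:
        return "client error"
    if 500 <= code < 600:
        return "server error"
    return "unknown"


def crawl_problems(crawl_log):
    """Group URLs by problem category, skipping healthy and redirected URLs."""
    problems = {}
    for url, code in crawl_log.items():
        category = classify_status(code)
        if category not in ("ok", "redirect"):
            problems.setdefault(category, []).append(url)
    return problems
```

Server errors usually deserve attention first, because they affect every page the crawler requests while the problem lasts.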

JavaScript Rendering Issues

Complex JavaScript implementations can create indexation obstacles. Client-side rendering and heavy JavaScript dependencies may result in content that isn’t immediately visible to crawlers, causing incomplete content analysis or indexation delays.
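
One simple way to gauge this risk is to check whether key phrases already appear in the raw HTML the server returns, before any JavaScript runs. The sketch below does that with Python’s standard HTML parser. The `TextExtractor` class and `missing_from_raw_html` function are hypothetical names, and the check says nothing about how Google actually renders the page.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects visible text, ignoring the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def missing_from_raw_html(html, phrases):
    """Return the phrases that do not appear in the unrendered HTML text."""
    extractor = TextExtractor()
    extractor.feed(html)
    text = " ".join(" ".join(extractor.chunks).split()).lower()
    return [phrase for phrase in phrases if phrase.lower() not in text]
```

If a product name or headline shows up in the list this function returns, that content exists only after client-side rendering and may be indexed late or incompletely.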

Robots.txt and Noindex Conflicts

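Improper robots.txt configuration can accidentally block important website sections. Common mistakes include blocking valuable content directories or using overly restrictive rules that prevent normal crawling operations. A conflict arises when a page carries a noindex tag but is also blocked in robots.txt: crawlers never fetch the page, so they never see the noindex tag, and the URL can still end up in the index if other pages link to it.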
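
A crawler that is disallowed from a URL never fetches its HTML, so a noindex tag on that page goes unread. The sketch below flags such URLs using Python’s standard `urllib.robotparser`. The `find_hidden_noindex` function and its inputs are assumptions for this example: you would supply your own robots.txt text and your own list of pages known to carry noindex.

```python
from urllib.robotparser import RobotFileParser


def find_hidden_noindex(robots_txt, noindex_urls, user_agent="Googlebot"):
    """Return the noindex URLs that robots.txt prevents user_agent from fetching."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in noindex_urls if not parser.can_fetch(user_agent, url)]
```

For every URL this returns, decide which behavior you want: remove the robots.txt rule so the noindex tag can be read, or keep the block and accept that the tag has no effect.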

Best Practices to Get Indexed Faster

Accelerating the indexation process requires implementing strategic optimizations that align with search engine preferences.

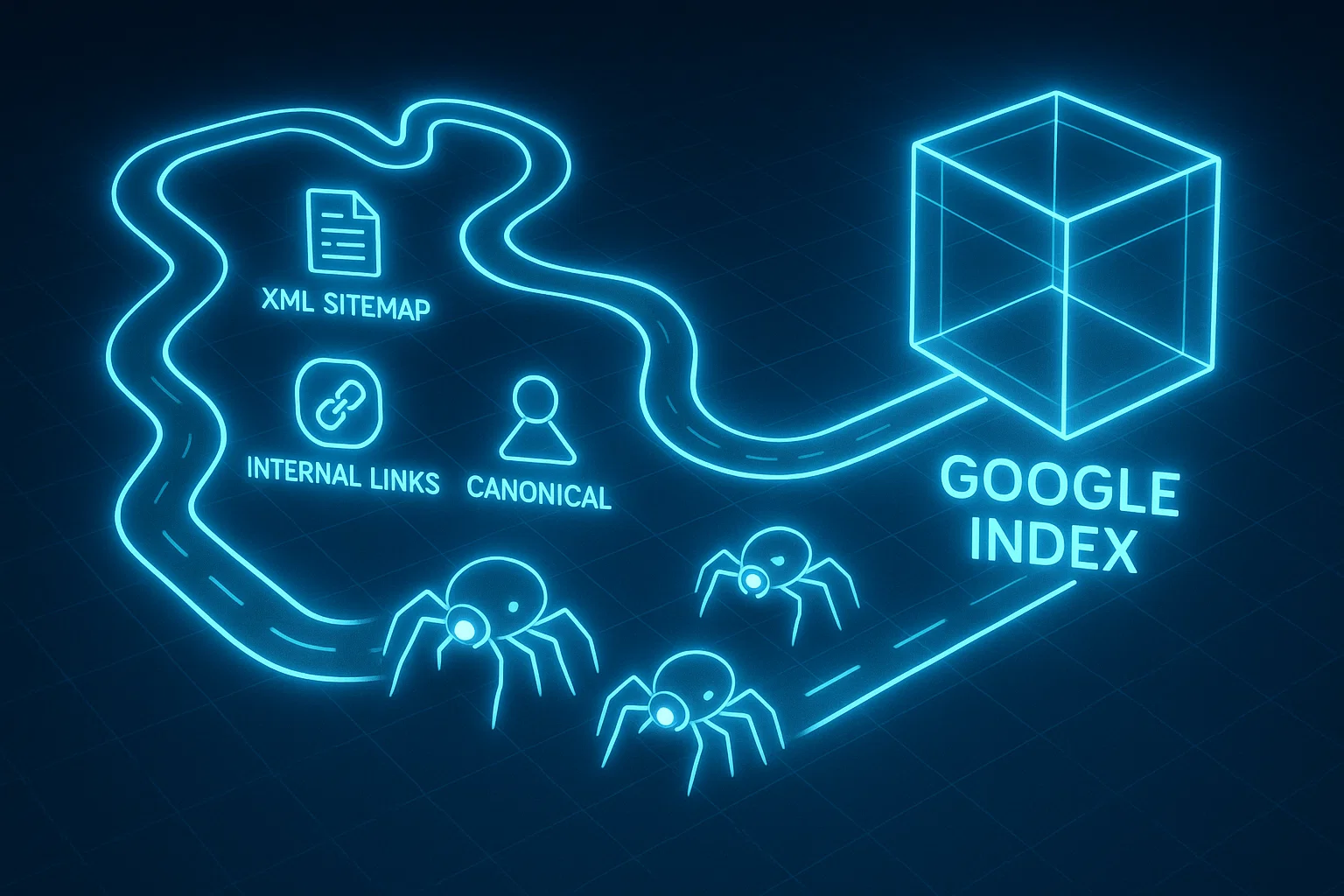

Submit XML Sitemap to Google

XML sitemaps serve as roadmaps that help search engines discover your website structure. Submit yours through the Sitemaps section of Google Search Console. Effective sitemaps should include:

- Accurate last modification dates for content updates

- An organized structure using sitemap index files for large sites

- Priority indicators and change frequency information only where other search engines use them, since Google states that it ignores both values
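
As a minimal sketch, a sitemap in the sitemaps.org format can be generated with Python’s standard `xml.etree.ElementTree` module. The `build_sitemap` function and the example URLs and dates are made up for illustration. Only the location and last modification date are written to keep the example short.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, lastmod) pairs; lastmod is a YYYY-MM-DD string."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical pages for illustration.
    print(build_sitemap([
        ("https://example.com/", "2024-01-15"),
        ("https://example.com/blog/first-post", "2024-02-01"),
    ]))
```

The sitemap protocol limits a single file to 50,000 URLs, so larger sites would split the output into several files and list them in a sitemap index file.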

Build Strong Internal Linking

Strategic internal linking creates clear pathways for crawlers while distributing authority throughout your website. Focus on contextual links that provide genuine user value rather than mechanical link insertions.
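
The idea of clear pathways can be made concrete by measuring click depth, the number of links a crawler must follow from the homepage to reach a page. The sketch below runs a breadth-first search over a link graph and also lists orphan pages that no path reaches. The `links` dictionary format and both function names are assumptions for illustration. In practice the graph would come from a crawl of your own site.

```python
from collections import deque


def click_depths(links, start):
    """Return {page: minimum number of clicks from start} for reachable pages."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphan_pages(links, start):
    """Return known pages that cannot be reached by following links from start."""
    all_pages = set(links) | {t for targets in links.values() for t in targets}
    return sorted(all_pages - set(click_depths(links, start)))
```

Pages with a large click depth, and orphan pages in particular, are good candidates for new contextual links from well-linked pages.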

Optimize Page Load Speed and Server Response

Fast-loading websites receive more frequent crawling attention. Aim for server response times under 200 milliseconds and implement content delivery networks (CDNs) for consistent performance across geographic locations.
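
A rough way to compare your pages against the 200 millisecond target is to time how long the first byte of a response takes to arrive. The sketch below uses only the Python standard library. The function names are hypothetical, and the measurement includes DNS lookup, connection setup, and network latency from wherever the script runs, so it overstates pure server processing time.

```python
import time
import urllib.request

TARGET_MS = 200.0  # threshold taken from the text above


def time_to_first_byte_ms(url, timeout=10.0):
    """Measure the time until the first response byte arrives, in milliseconds."""
    start = time.perf_counter()
    with urllib.request.urlopen(url, timeout=timeout) as response:
        response.read(1)
    return (time.perf_counter() - start) * 1000.0


def slow_urls(timings_ms, target_ms=TARGET_MS):
    """Return the URLs whose measured time exceeds the target, sorted by name."""
    return sorted(url for url, ms in timings_ms.items() if ms > target_ms)


# Example usage (performs real network requests, so it is left commented out):
# timings = {u: time_to_first_byte_ms(u) for u in ["https://example.com/"]}
# print(slow_urls(timings))
```

Taking several measurements per URL and comparing medians gives a steadier picture than a single request.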

Use Canonical Tags Correctly

Canonical tags help search engines understand which version of similar content should be prioritized for indexation. Implement self-referencing canonical tags on unique pages and avoid common mistakes like creating canonical chains or using relative URLs.
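
The two checks named above, self-referencing canonicals and absolute URLs, are easy to automate. This sketch extracts the canonical link from a page’s HTML and reports both properties. The `CanonicalParser` class and `audit_canonical` function are hypothetical names, and the comparison is a plain string match that ignores differences such as trailing slashes.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit


class CanonicalParser(HTMLParser):
    """Collects href values from <link rel="canonical"> tags."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = dict(attrs)
        rels = (attr.get("rel") or "").lower().split()
        if "canonical" in rels and attr.get("href"):
            self.canonicals.append(attr["href"])


def audit_canonical(html, page_url):
    """Summarize the canonical tags found on a page fetched from page_url."""
    parser = CanonicalParser()
    parser.feed(html)
    href = parser.canonicals[0] if parser.canonicals else None
    parts = urlsplit(href or "")
    return {
        "canonical": href,
        "count": len(parser.canonicals),
        "is_absolute": bool(parts.scheme and parts.netloc),
        "is_self_referencing": href == page_url,
    }
```

A count above one, or `is_absolute` being false, points to the kind of canonical mistake described above. A canonical that is not self-referencing is only a problem on pages you expect to be indexed under their own URL.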

Manually Submit URLs via Google Search Console

Use the URL Inspection tool in Google Search Console to request indexing for important new content rather than submitting every page. Google limits manual indexation requests, so selective submission is more effective than bulk submission.

Leverage Backlinks and Mentions

High-quality backlinks from authoritative websites signal that your content deserves crawling attention. Focus on earning contextual links from industry-relevant sources, as these connections provide stronger relevance and quality signals.

Why Websites Fail to Get Indexed

Understanding root causes of indexation failures helps diagnose and resolve issues preventing content from appearing in search results.

Blocked by Robots.txt

Overly restrictive robots.txt files and incorrectly used meta robots directives are among the primary reasons pages fail to be crawled or indexed, which reduces search visibility. Regular audits help ensure that complex content management system configurations are not accidentally blocking important pages.

Lack of High-Quality Content

Search engines prioritize indexing content providing substantial user value. Pages with minimal content, poor quality, or little unique information may be crawled but rejected based on quality assessments.

No Sitemap Submitted

While search engines can discover content through link crawling, submitted sitemaps significantly improve discovery rates, particularly for new websites or recently published content.

Mobile-First Issues

Google’s mobile-first indexing means that the mobile version of a page is the primary basis for indexation decisions. Ensure mobile websites provide the same high-quality content and user experience as desktop versions.

FAQs on Crawlability and Indexation

Crawling frequency varies based on website authority, content update frequency, and crawl budget. Popular sites may be crawled multiple times daily, while smaller sites might be crawled weekly or monthly.

Crawl budget is the number of pages search engines will crawl on a site within a given timeframe. Large websites need to optimize it by prioritizing important pages and eliminating crawl waste from low-value URLs.

Strategic internal linking significantly enhances crawlability by creating clear pathways for crawlers. Well-structured internal networks ensure important pages receive regular crawling attention.

Indexation timeframes vary based on website authority, content quality, and crawling priorities. Established websites may see content indexed within hours, while newer sites might wait days or weeks.

Conclusion

Successfully getting your website crawled and indexed requires understanding the complex interplay between technical optimization and content quality. This in-depth guide highlights how search engines crawl and index websites and why strategic improvements ensure long-term visibility. By implementing proper technical foundations, creating high-value content, and using tools like Google Search Console to monitor your site’s performance, you ensure your website gains search visibility and supports a successful SEO strategy.

Focus on creating websites that serve user needs effectively while following technical best practices that make content easily accessible to search engines. This user-first approach, combined with proper technical implementation, provides the foundation for sustained organic search success.

FAQ on Crawl and Index in SEO

Search engines crawl web pages by following internal and external links, analyzing XML sitemaps, and processing content. After crawling your site, they evaluate quality, relevance, and uniqueness before deciding whether to include the page in the index.

Slow indexation may happen due to technical errors, thin content, duplicate pages, or a lack of backlinks. To improve crawling and indexing speed, make sure your sitemap is submitted, the server responds quickly, and all important pages are free from 404 errors.

Pages may not appear in the index due to robots.txt restrictions, noindex tags, or low-quality signals. Issues that confuse search engines, such as duplicate content or poor structure, also reduce the chance for your website to rank.

If crawlers cannot reach your pages, they aren’t indexed and won’t contribute to visibility. Good internal linking, fast performance, and correct canonical usage help ensure crawlability, which directly supports SEO success.

Ranking your content requires a balance between technical SEO and valuable information. Focus on comprehensive articles, strong backlink profiles, and an optimized structure that helps search engines rank websites more effectively.

Yes, this article serves as an in-depth guide, explaining how search engines crawl and index a site on Google, and what strategies help achieve long-term ranking improvements.